You might have noticed the frenzy of AI leaders and tech CEOs travelling around the world, and to Europe in particular, to meet presidents and prime ministers and discuss the impact of AI not only on business but, more importantly, on society. The EU has been working to adapt an existing legislative proposal and appears poised to become the first governing body to legislate on AI. In this chapter of Chronicles of Change, we take a deep dive into how this proposal came about and what it means for your business.

Featured Insight: EU Regulation on AI

The upcoming EU regulation on AI, known as the AI Act, is considered landmark legislation because it would be the world's first comprehensive set of rules on artificial intelligence. It was developed to ensure a human-centric and ethical approach to AI, emphasizing transparency, safety, non-discrimination and environmental friendliness. The rules are designed to be technology-neutral, so that they apply to both current and future AI systems.

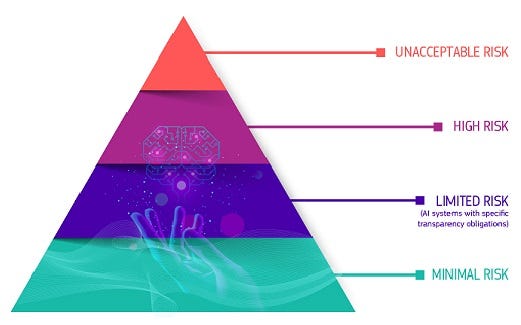

One significant aspect of the proposed regulation is its risk-based approach: AI systems are categorized by the level of risk they pose, and that category determines how strictly they are regulated. For instance, AI systems that present an unacceptable level of risk to people's safety would be strictly prohibited. This includes systems that deploy manipulative techniques, exploit people's vulnerabilities or are used for social scoring. The legislation further seeks to ban AI systems in certain areas deemed intrusive and discriminatory, such as real-time remote biometric identification in public spaces, emotion recognition in law enforcement, and the indiscriminate scraping of biometric data from social media or CCTV footage.

Let's delve into each risk category:

Unacceptable risk: These are AI systems that are strictly prohibited because they pose a clear threat to people's safety, livelihoods, rights and dignity. Examples include AI systems that manipulate human behaviour, opinions or decisions through subliminal techniques or exploit people's vulnerabilities; AI systems that enable social scoring by governments; and AI systems that enable real-time remote biometric identification in public spaces [1].

High risk: These are AI systems that can significantly affect a person's life chances, fundamental rights or safety. Examples include AI systems used in recruitment, education, access to credit, migration, law enforcement, the judiciary, healthcare, transport, energy and critical infrastructure [1][2].

Limited risk: These are AI systems subject to specific transparency obligations, so that users know they are interacting with an AI system and can make informed decisions. Examples include chatbots, deepfakes and emotion recognition systems [1][2].

Minimal or no risk: These are AI systems that carry no obligations under the AI Act because they pose little or no risk to human rights or safety. Examples include AI used in video games, spam filters or email categorisation [2].

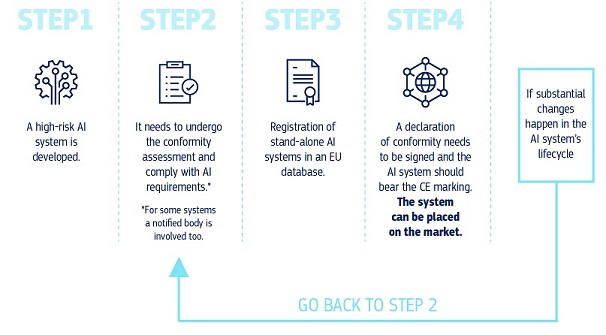

High-risk AI systems are given special attention in the proposed regulation, with the classification of high-risk areas expanded to include harm to health, safety, fundamental rights, or the environment. Specific AI systems, such as those that could influence voters in political campaigns or recommender systems used by large social media platforms, are also added to the high-risk list.

Another notable feature is the set of rules for general-purpose AI, particularly "foundation models" such as GPT. Providers of these models would have to ensure robust protection of fundamental rights, health and safety, the environment, democracy and the rule of law. They would also have to meet additional transparency requirements, such as disclosing that content was generated by AI, designing the model to prevent it from generating illegal content, and publishing summaries of the copyrighted data used for training.

The proposed regulation aims to balance the protection of fundamental rights with the need to provide legal certainty to businesses and stimulate innovation in Europe. To achieve this, the legislation includes exemptions for research activities and AI components provided under open-source licenses. It also promotes regulatory sandboxes for testing AI before deployment. Importantly, the legislation also seeks to enhance citizens' rights to file complaints about AI systems and receive explanations of decisions based on high-risk AI systems that significantly impact their rights.

Critique

There is broad consensus that some degree of AI regulation is necessary, but a few points deserve careful consideration.

To set the stage, the legislation we're discussing has its origins in 2018 and was updated in 2021 to address rising concerns about the use of AI in areas such as social engineering. With the arrival of generative AI tools such as ChatGPT, which sparked global interest, the EU has had to adapt the proposal urgently to respond to this new generation of AI technologies.

Historically, we have always sought to regulate activities that carry significant risks. The pharmaceutical industry, for example, faces rigorous regulation because of its substantial impact on health. In more extreme cases, activities such as the development of nuclear weapons are banned or heavily restricted.

The challenge with AI regulation is that, proverbially, the genie is already out of the bottle. Open platforms such as Hugging Face offer access to more than 200,000 open-source AI models and datasets. Combine this with affordable cloud computing power, and AI technology becomes accessible to just about anyone with an internet connection and a modest budget.

The dilemma, therefore, is that those with malicious intent already have the means to misuse AI:

The technology is widely accessible.

It's so affordable that billions of people worldwide could experiment with it.

The delivery mechanism, the internet, poses practically no barriers to entry.

While the intent behind the regulation is commendable, aiming to uphold a human-centric and ethical approach to AI, its execution may inadvertently stifle those who want to use AI for good. The regulation's scope is currently broad and loosely defined: it covers any software that uses machine learning or similar techniques to achieve an outcome. Its governance structure, with multiple layers of authority at both EU and national level, could confuse businesses. Moreover, the legislation lacks flexibility: if a new high-risk AI application emerges in the next couple of years, it can't be added to the EU's high-risk framework without revising the law.

In essence, while the EU's intent to regulate AI is clear, the specific elements being regulated aren't. Despite the laudable aim of the legislation, its actual implementation lacks focus on practical use cases and fails to provide a clear, actionable blueprint for businesses.

As these regulations continue to take shape, it's crucial for businesses to stay proactive, starting with a comprehensive review of their AI technology and its applications.

The impact on businesses

Regardless of size, all businesses must begin preparing for the forthcoming EU AI legislation, which looks set to be even more disruptive than the GDPR. Now is the time to act, and here's a strategic starting point:

1. Audit Your AI: Build a comprehensive inventory of the AI models currently in use within your organization, including a traceable record of how each model was created, so that you can demonstrate compliance with future regulatory requirements.

2. Risk Assessment: It's crucial to identify where your AI technologies fall within the EU's risk categories. Performing a thorough risk assessment will help you discern between high-risk and low-risk AI applications, aligning your AI strategy with the proposed regulations.

3. Data and Training Transparency: Investigate the nitty-gritty of how your models were trained, and be ready to provide evidence of the lawful origin of the data used. Demonstrating transparency and ethical sourcing in your data practices will be critical in complying with future AI regulations.

4. Embrace Regulatory Sandboxes: Get to grips with the concept of regulatory sandboxes. It's highly likely that these controlled testing environments will become the norm for experimenting with sensitive AI technologies before full deployment. Understanding these now will position you for success in the future.

5. Prepare for Public Feedback: Much like the rollout of GDPR, the introduction of AI regulations will likely stimulate public inquiry and possible complaints. Begin designing processes to effectively handle such feedback, ensuring a smooth transition as the new regulations take effect.
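To make steps 1 to 3 more concrete, here is a minimal Python sketch of an internal AI inventory that records each system's use case and training-data provenance and assigns a provisional risk tier. It assumes a simple keyword lookup built only from the examples listed earlier in this article; the names `AISystemRecord`, `review_inventory` and `EXAMPLE_USE_CASES` are hypothetical, and the output is a starting point for a conversation with your legal team, not a legal classification under the AI Act.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    """The four risk categories described in the proposed AI Act."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical lookup built only from the examples in this article.
# It is NOT a legal classification under the AI Act.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "real-time remote biometric identification": RiskTier.UNACCEPTABLE,
    "recruitment": RiskTier.HIGH,
    "education": RiskTier.HIGH,
    "access to credit": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "deepfake": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "video game": RiskTier.MINIMAL,
}


@dataclass
class AISystemRecord:
    """One entry in a hypothetical internal AI inventory (step 1)."""
    name: str
    use_case: str
    training_data_sources: list[str] = field(default_factory=list)  # step 3
    data_provenance_documented: bool = False  # step 3: lawful origin evidenced?

    def risk_tier(self) -> RiskTier | None:
        """Step 2: provisional tier, or None if the use case is not in the lookup."""
        return EXAMPLE_USE_CASES.get(self.use_case.strip().lower())


def review_inventory(records: list[AISystemRecord]) -> list[str]:
    """Return follow-up actions for systems that need attention."""
    actions = []
    for rec in records:
        tier = rec.risk_tier()
        if tier is None:
            actions.append(f"{rec.name}: use case not mapped, assess manually")
        elif tier is RiskTier.UNACCEPTABLE:
            actions.append(f"{rec.name}: likely prohibited, escalate immediately")
        elif tier is RiskTier.HIGH and not rec.data_provenance_documented:
            actions.append(f"{rec.name}: high risk, document training data provenance")
        elif tier is RiskTier.LIMITED:
            actions.append(f"{rec.name}: limited risk, tell users they are interacting with AI")
    return actions


if __name__ == "__main__":
    inventory = [
        AISystemRecord("CV screener", "recruitment", ["historic applications"]),
        AISystemRecord("Support bot", "chatbot", data_provenance_documented=True),
        AISystemRecord("Churn model", "customer retention"),
    ]
    for action in review_inventory(inventory):
        print(action)
```

Anything that doesn't match a known example is flagged for manual review rather than silently treated as minimal risk; defaulting to review is the safer choice while the regulation's scope is still being defined.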

These are just a few initial, proactive steps in a wider transformation journey that businesses of all sizes will have to undertake to reap the benefits of new technologies while keeping risks at bay and their reputations intact.

Spotlight on: Claude

The gap is closing quickly: ChatGPT with GPT-4, once considered clearly superior in quality to its competitors, no longer holds such a distinctive edge. Over recent weeks, I've been experimenting with a promising contender, Claude, Anthropic's conversational AI, with highly satisfactory results.

A key difference between ChatGPT and Claude lies in how much text each can handle in a single prompt. ChatGPT can manage around 3,000 words, while Claude comfortably processes more than 10,000. Note, however, that as prompts grow longer, a model's ability to keep track of context may decline. Still, Claude is well worth experimenting with, especially since it is currently available at no cost. An added bonus is its Slack integration, which makes it a convenient addition to your existing workflow.
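If you want to check a document against these limits before pasting it into a prompt, a quick word count gives a rough answer. The Python sketch below treats the approximate word figures quoted above as assumptions; in practice both products set their limits in tokens rather than words, and those limits change as the products evolve. The function name `assistants_that_fit` is hypothetical.

```python
from __future__ import annotations

# Approximate prompt limits quoted in this article, in words. Assumption:
# the real limits are set in tokens and change as the products evolve.
# Claude's figure is the article's lower bound ("more than 10,000 words"),
# so this check is deliberately conservative.
APPROX_WORD_LIMITS = {
    "ChatGPT": 3_000,
    "Claude": 10_000,
}


def assistants_that_fit(text: str) -> list[str]:
    """Return the assistants whose approximate word limit the text fits within."""
    word_count = len(text.split())  # counts whitespace-separated words
    return [name for name, limit in APPROX_WORD_LIMITS.items() if word_count <= limit]


if __name__ == "__main__":
    report = "word " * 5_000  # stand-in for a 5,000-word document
    print(assistants_that_fit(report))  # prints ['Claude']
```

A word count is only a proxy: remember that even within the limit, very long prompts may see the model lose track of earlier context.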

Follow me

That's all for this week. To keep up with the latest in generative AI and its relevance to your digital transformation programs, follow me on LinkedIn or subscribe to this newsletter.